NASA’s Kepler Space Telescope, despite being hobbled by the loss of critical guidance systems, has discovered a star with three planets only slightly larger than Earth. The outermost planet orbits in the "Goldilocks" zone, a region where surface temperatures could be moderate enough for liquid water — and perhaps life — to exist.

The star, EPIC 201367065, is a cool red M-dwarf about half the size and mass of our own sun. At a distance of 150 light-years, the star ranks among the top 10 nearest stars known to have transiting planets. The star’s proximity means it is bright enough for astronomers to study the planets’ atmospheres, to determine whether they are like Earth’s atmosphere and possibly conducive to life.

"A thin atmosphere made of nitrogen and oxygen has allowed life to thrive on Earth. But nature is full of surprises. Many exoplanets discovered by the Kepler mission are enveloped by thick, hydrogen-rich atmospheres that are probably incompatible with life as we know it," said Ian Crossfield, the University of Arizona astronomer who led the study.

A paper describing the find by astronomers at the UA, the University of California, Berkeley, the University of Hawaii, Manoa, and other institutions has been submitted to Astrophysical Journal and is freely available on the arXiv website. NASA and the National Science Foundation funded the research.

Co-authors of the paper include Travis Barman, a UA associate professor of planetary sciences, and Joshua Schlieder of the NASA Ames Research Center and colleagues from Germany, the United Kingdom and the U.S.

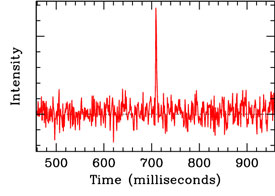

The three planets are 2.1, 1.7 and 1.5 times the size of Earth. The smallest and outermost planet, at 1.5 Earth radii, orbits far enough from its host star that it receives levels of light from its star similar to those received by Earth from the sun, said UC Berkeley graduate student Erik Petigura. He discovered the planets Jan. 6 while conducting a computer analysis of the Kepler data NASA has made available to astronomers. In order from closest to farthest from their star, the three planets receive 10.5, 3.2 and 1.4 times the light intensity of Earth, Petigura calculated.
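For readers who want to see what those light levels imply, here is a rough sketch rather than the team's own calculation. Starlight weakens with the square of distance, so the planet receiving the most light is the closest one, and the ratio of two planets' light levels fixes the ratio of their orbital distances. The sketch assumes circular orbits, and the function name `distance_ratio` is made up for illustration.

```python
import math

# Light received relative to Earth, from the most to the least illuminated
# planet (figures quoted in the article).
relative_flux = [10.5, 3.2, 1.4]


def distance_ratio(flux_inner, flux_outer):
    """Outer-to-inner orbital distance ratio implied by the inverse-square law.

    Assumes circular orbits around the same star (an illustrative simplification).
    """
    return math.sqrt(flux_inner / flux_outer)


# Orbital distance of each planet in units of the innermost planet's distance.
ratios = [distance_ratio(relative_flux[0], f) for f in relative_flux]

for flux, ratio in zip(relative_flux, ratios):
    print(f"{flux:>4} x Earth's light -> {ratio:.2f} x innermost orbital distance")
```

Under these assumptions the outermost planet orbits a little under three times as far from the star as the innermost one.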

"Most planets we have found to date are scorched. This system is the closest star with lukewarm transiting planets," Petigura said. "There is a very real possibility that the outermost planet is rocky like Earth, which means this planet could have the right temperature to support liquid water oceans."

University of Hawaii astronomer Andrew Howard noted that extrasolar planets are discovered by the hundreds these days, although many astronomers are left wondering if any of the newfound worlds are really like Earth. The newly discovered planetary system will help resolve this question, he said.

"We’ve learned in the past year that planets the size and temperature of Earth are common in our Milky Way galaxy," Howard said. "We also discovered some Earth-size planets that appear to be made of the same materials as our Earth, mostly rock and iron."

Kepler’s K2 Mission

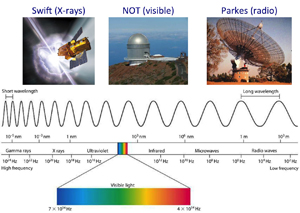

After Petigura found the planets in the Kepler light curves, the team quickly employed telescopes in Chile, Hawaii and California to characterize the star’s mass, radius, temperature and age. Two of the telescopes involved — the Automated Planet Finder on Mount Hamilton near San Jose, California, and the Keck Telescope on Mauna Kea, Hawaii — are University of California facilities.

The next step will be observations with other telescopes, including the Hubble Space Telescope, to take the spectroscopic fingerprint of the molecules in the planetary atmospheres. If these warm, nearly Earth-size planets have puffy, hydrogen-rich atmospheres, Hubble will see the telltale signal, Petigura said.

The discovery is all the more remarkable, he said, because the Kepler telescope lost two reaction wheels that kept it pointing at a fixed spot in space.

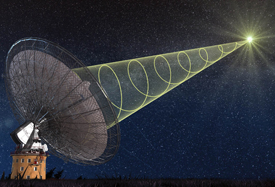

Kepler was reborn in 2014 as "K2" with a clever strategy of pointing the telescope in the plane of Earth’s orbit, the ecliptic, to stabilize the spacecraft. Kepler is now back to mining the cosmos for planets by searching for eclipses or "transits," as planets pass in front of their host stars and periodically block some of the starlight.
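To give a sense of how small these dips in starlight are, the following back-of-the-envelope sketch estimates the fraction of light each planet blocks as the square of the planet-to-star radius ratio. It is not the team's analysis: it takes the star to be exactly half the sun's radius (the article says "about half"), uses approximate reference radii for the sun and Earth, and ignores effects such as limb darkening. The names `transit_depth`, `R_SUN_KM` and `R_EARTH_KM` are illustrative.

```python
# Approximate reference radii in kilometers (assumed values for illustration).
R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0

# The article describes the star as about half the size of the sun;
# exactly 0.5 is an assumption made here.
star_radius_km = 0.5 * R_SUN_KM

# Planet sizes in Earth radii, as quoted in the article.
planet_radii_earth = [2.1, 1.7, 1.5]


def transit_depth(planet_radius_km, star_radius_km):
    """Fraction of starlight blocked when the planet crosses the stellar disk.

    Simple geometric estimate: ratio of the two disk areas.
    """
    return (planet_radius_km / star_radius_km) ** 2


depths = [transit_depth(r * R_EARTH_KM, star_radius_km) for r in planet_radii_earth]

for radius, depth in zip(planet_radii_earth, depths):
    print(f"{radius} Earth radii -> star dims by about {depth * 100:.3f} percent")
```

With these inputs each planet dims the star by only a fraction of a percent, which illustrates why steady pointing matters so much for detecting transits.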

"This discovery proves that K2, despite being somewhat compromised, can still find exciting and scientifically compelling planets," Petigura said. "This ingenious new use of Kepler is a testament to the ingenuity of the scientists and engineers at NASA. This discovery shows that Kepler can still do great science."

Kepler sees only a small fraction of the planetary systems in its gaze: only those with orbital planes aligned edge-on to our view from Earth. Planets with large orbital tilts are missed by Kepler. A census of Kepler planets the team conducted in 2013 corrected statistically for these random orbital orientations and concluded that one in five sunlike stars in the Milky Way galaxy has Earth-size planets in the habitable zone. Accounting for other types of stars as well, there may be 40 billion such planets galaxywide.

The original Kepler mission found thousands of small planets, but most of them were too faint and far away for astronomers to assess their density and composition and thus determine whether they were high-density, rocky planets like Earth or puffy, low-density planets like Uranus and Neptune. Because the star EPIC-201 is nearby, these mass measurements are possible. The host star, an M-dwarf, is less intrinsically bright than the sun, which means that its planets can reside close to the host star and still enjoy lukewarm temperatures.

According to Howard, the system most like that of EPIC-201 is Kepler-138, an M-dwarf star with three planets of similar size, though none are in the habitable zone.